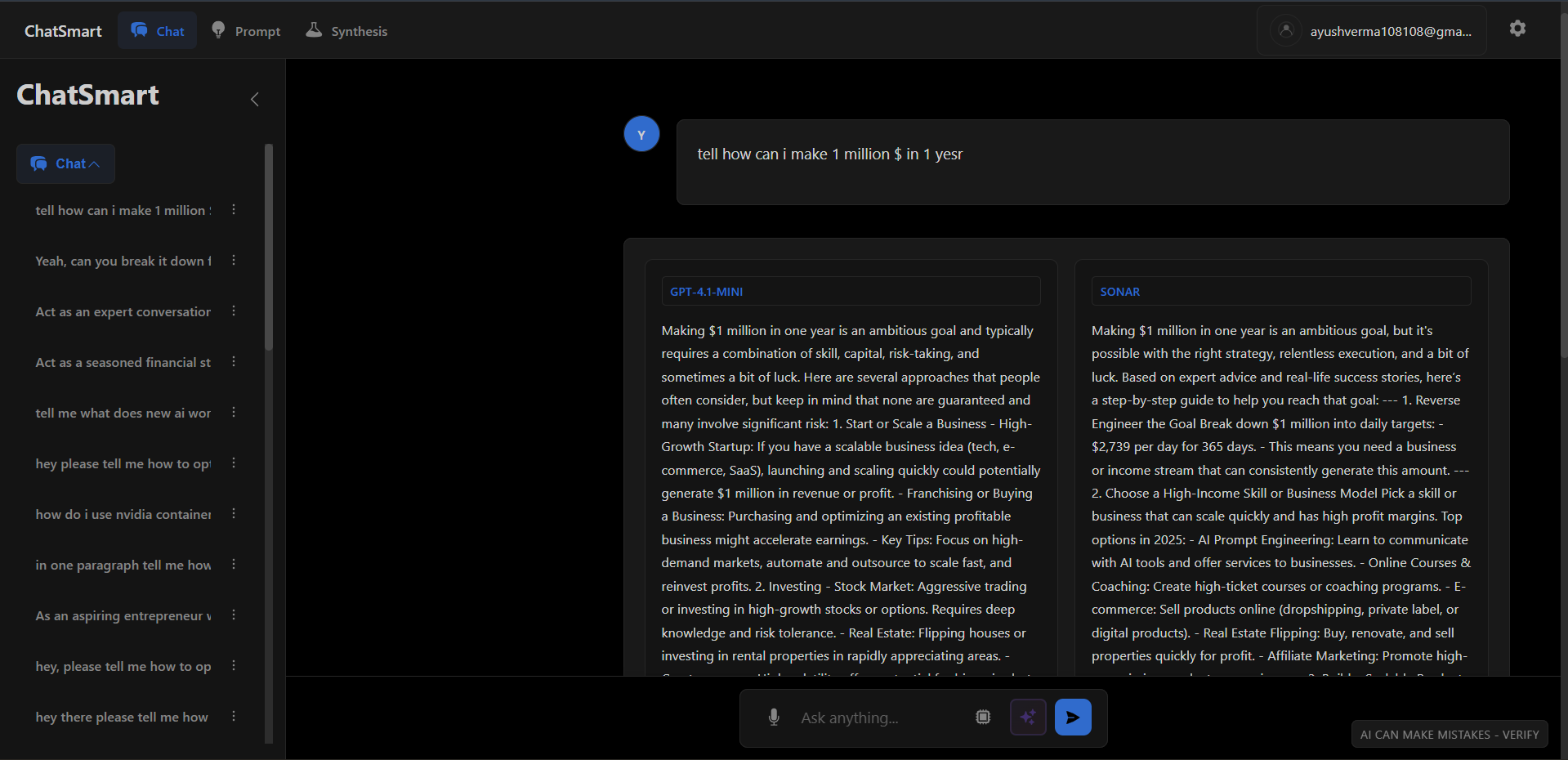

ChatSmart – Intelligent Multi-Model AI Chat Platform

Portfolio chat cockpit I use daily to compare models

Media & Demos

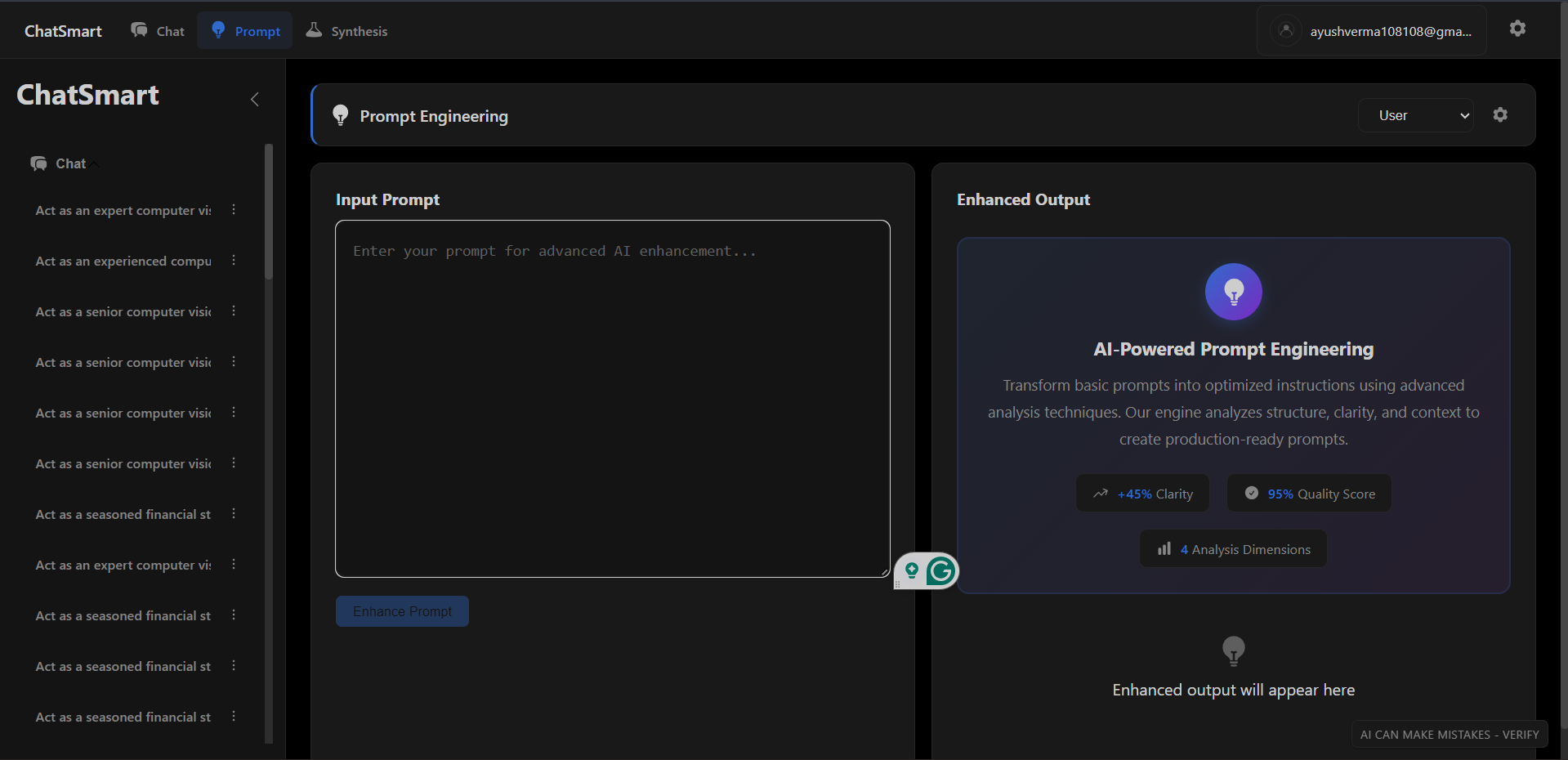

Prompt Engineering Flow

Role

Creator / Full-Stack Engineer

Period

2024 – Present

Category

AI

Overview

A portfolio-grade AI chat platform I use myself to compare and orchestrate responses across multiple LLM providers. It includes pluggable adapters (OpenAI GPT-4, Perplexity Sonar), a synthesis engine, a reasoning mode, and comprehensive error handling. It has not been released to external users yet.
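To make the pluggable-adapter idea concrete, here is a minimal sketch. It is an illustration under assumptions, not ChatSmart's actual code: `ModelAdapter`, `EchoAdapter`, `ADAPTERS`, and `register` are hypothetical names, and the stand-in adapter returns canned text instead of calling a provider API.

```python
from typing import Protocol


class ModelAdapter(Protocol):
    """Hypothetical interface that every provider adapter would satisfy."""

    name: str

    def complete(self, prompt: str) -> str:
        ...


class EchoAdapter:
    """Stand-in adapter; a real one would call the provider's API here."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


# Registry keyed by adapter name, so a new provider plugs in without touching callers.
ADAPTERS: dict[str, ModelAdapter] = {}


def register(adapter: ModelAdapter) -> None:
    ADAPTERS[adapter.name] = adapter


register(EchoAdapter("gpt-4"))
register(EchoAdapter("sonar"))

# Callers iterate over the registry and never reference a concrete provider class.
replies = {name: adapter.complete("hello") for name, adapter in ADAPTERS.items()}
```

Because callers depend only on the `complete` method, adding a provider in this sketch means writing one class and one `register` call.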

Key Highlights

- Multi-model support with OpenAI GPT-4 and Perplexity Sonar adapters

- Advanced synthesis engine with token-frequency, summarization, and score-based blending

- Reasoning mode with step-by-step thinking visualization

- Production-ready auth with JWT, rate limiting, and response caching

- Full test coverage and comprehensive error handling
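As one plausible reading of the token-frequency and score-based blending mentioned in the highlights, the sketch below scores each response by how much of its vocabulary the other responses share, then picks the highest scorer. The function names (`tokenize`, `consensus_scores`, `pick_consensus`) and the scoring rule itself are assumptions made for illustration; the page does not describe the actual algorithm.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word-like tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def consensus_scores(responses: dict[str, str]) -> dict[str, float]:
    """Score each response by how often its distinct tokens appear in other responses."""
    token_sets = {name: set(tokenize(text)) for name, text in responses.items()}
    # Document frequency: the number of responses that contain each token.
    doc_freq = Counter(tok for toks in token_sets.values() for tok in toks)
    scores: dict[str, float] = {}
    for name, toks in token_sets.items():
        if not toks:
            scores[name] = 0.0
            continue
        # Subtract 1 so a response gets no credit for containing its own tokens.
        scores[name] = sum(doc_freq[t] - 1 for t in toks) / len(toks)
    return scores


def pick_consensus(responses: dict[str, str]) -> str:
    """Return the name of the response with the highest consensus score."""
    scores = consensus_scores(responses)
    return max(scores, key=scores.get)


responses = {
    "a": "Paris is the capital",
    "b": "The capital is Paris",
    "c": "London maybe",
}
scores = consensus_scores(responses)
best = pick_consensus(responses)
```

In this toy input, responses `a` and `b` share all of their tokens while `c` shares none, so `c` scores zero and is never selected.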

Tech Stack

Summary

On-page overview: a concise summary of the challenges, solution, and outcome for this project.

The Problem

I needed a reliable cockpit to compare GPT-4, Claude, and Sonar outputs for my own work, but existing tools made reproducible prompt experiments painful.

The Solution

I built a unified interface with parallel model queries, adapter abstractions, and a synthesis engine that highlights overlap and divergence so I can dogfood ideas quickly.
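The parallel model queries could look roughly like the sketch below, which uses `asyncio.gather` to fan a prompt out to several models at once. `fake_model` and `query_all` are hypothetical names, and the stand-in coroutine sleeps instead of making a network call, so this shows the concurrency pattern only, not the real provider integration.

```python
from __future__ import annotations

import asyncio


async def fake_model(name: str, prompt: str, delay: float) -> str:
    """Hypothetical stand-in for a provider call; sleeps instead of doing network I/O."""
    await asyncio.sleep(delay)
    if name == "broken":
        raise RuntimeError("provider unavailable")
    return f"{name}: answer to {prompt!r}"


async def query_all(prompt: str, models: dict[str, float]) -> dict[str, str | BaseException]:
    """Query every model concurrently; one failure does not cancel the others."""
    names = list(models)
    # return_exceptions=True returns raised exceptions as results instead of propagating them.
    results = await asyncio.gather(
        *(fake_model(n, prompt, models[n]) for n in names),
        return_exceptions=True,
    )
    # gather preserves input order, so zipping names with results is safe.
    return dict(zip(names, results))


results = asyncio.run(query_all("2+2?", {"gpt-4": 0.02, "sonar": 0.01, "broken": 0.0}))
```

Collecting exceptions as values lets the interface show which provider failed next to the answers that did arrive, rather than failing the whole comparison.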

The Outcome

No production users yet; ChatSmart serves as my daily driver, keeps experiments reproducible, and stays ready for future client demos.

Team & Role

I owned the stack end-to-end, apart from a short design consult. I keep iterating to make it 'invincible' before inviting anyone else in.

What I Learned

This project deepened my understanding of Python 3.11+ and FastAPI, and reinforced system-design practices around adapter abstractions, rate limiting, and response caching. It also gave me hands-on experience with production-grade concerns such as authentication, error handling, and performance optimization.